Have you ever wondered how search engines like Google and Bing return millions of relevant results within a fraction of a second? The answer lies in crawling and indexing. Because search engines have already worked out which page on your website best matches a given audience and search term, they can display the appropriate page quickly. To keep search engines from getting confused about which page to show, it's important to avoid duplicate content and add canonical tags. In this blog, we'll explore crawling and indexing, highlighting their significance and how they work together.

Online marketing differs from traditional marketing, and search engines play a significant role in earning users' trust when they search for products or services online. To ease users' buying journey, make sure that search engine bots can easily crawl your webpages and that your website is easily indexable. Crawling and indexing are crucial components of an effective SEO strategy. You can focus on vital points like SEO pagination and unique content to keep your website crawlable and indexable. Let's dive deeper into why this is essential and how it helps improve SEO campaign performance.

What is web crawling?

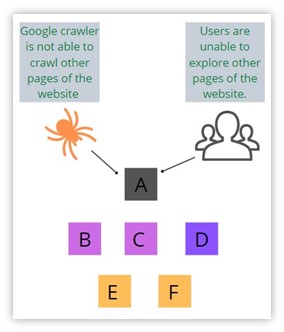

Web crawling works like an automated explorer traversing the intricate pathways of the internet. Search engine bots, often referred to as “spiders”, are responsible for this task. Their primary purpose is to systematically visit web pages, read their contents, and follow links to other pages. These bots act as the eyes of search engines, gathering the data that forms the foundation of search results. The crawling process starts with a list of seed URLs, usually popular websites or pages known to provide valuable information. The spiders visit these URLs, analyze the content, and then follow the links they find to other pages. This iterative process continues, expanding the scope of the crawl. However, not all pages are crawled; factors like page quality, content relevance, and accessibility influence the bot’s decision.
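The seed-and-follow loop described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular search engine works: the `LINK_GRAPH` dictionary, the `crawl` function, and the `max_pages` limit are all hypothetical names, and the "web" is an in-memory dictionary so the example runs without network access.

```python
from collections import deque

# Hypothetical in-memory "web": each URL maps to the links found on that page.
# A real crawler would fetch pages over HTTP and parse the HTML instead.
LINK_GRAPH = {
    "https://example.com/": ["https://example.com/blog", "https://example.com/products"],
    "https://example.com/blog": ["https://example.com/blog/post-1", "https://example.com/"],
    "https://example.com/products": ["https://example.com/products/widget"],
    "https://example.com/blog/post-1": [],
    "https://example.com/products/widget": ["https://example.com/blog"],
}


def crawl(seed_urls, link_graph, max_pages=100):
    """Breadth-first crawl: visit the seeds, then follow links to unseen pages."""
    frontier = deque(seed_urls)   # URLs waiting to be visited
    seen = set(seed_urls)         # URLs already queued, so nothing is visited twice
    visited = []                  # URLs in the order they were crawled
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        visited.append(url)
        for link in link_graph.get(url, []):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited


if __name__ == "__main__":
    for url in crawl(["https://example.com/"], LINK_GRAPH):
        print(url)
```

The `max_pages` cap is a simple stand-in for the fact that crawlers do not visit everything; real bots weigh signals such as quality and accessibility when deciding what to fetch.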

What is indexing?

Once the spiders have gathered data from various corners of the internet, the next step is indexing. Indexing is essentially building an organized database of the information collected during the crawling process. It involves analyzing and processing the content of web pages to create a structured index that can be quickly searched. The index stores information about the words or phrases found on web pages and where they occur. This index is what enables search engines to retrieve relevant results in the blink of an eye. However, it’s important to note that the index is not simply a raw copy of every page; it is organized around essential information such as keywords, metadata, and links. This streamlined approach allows for efficient and rapid retrieval of results when users perform searches.
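A common way to store "words and their locations" is an inverted index, which maps each word to the pages that contain it. The sketch below is a toy version under stated assumptions: the `PAGES` data and the `tokenize`, `build_index`, and `search` helpers are hypothetical, and real search indexes hold far more than word-to-page mappings.

```python
import re
from collections import defaultdict

# Hypothetical crawled pages: URL -> extracted text.
PAGES = {
    "https://example.com/crawling": "Search engine bots crawl web pages and follow links",
    "https://example.com/indexing": "Search engines index pages for fast retrieval",
}


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map every word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def search(index, query):
    """Return the URLs that contain every word in the query."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


if __name__ == "__main__":
    index = build_index(PAGES)
    print(search(index, "follow links"))
```

Because the lookup goes from word to pages rather than scanning every page for the word, answering a query is a matter of a few set operations, which is the reason retrieval can be so fast.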

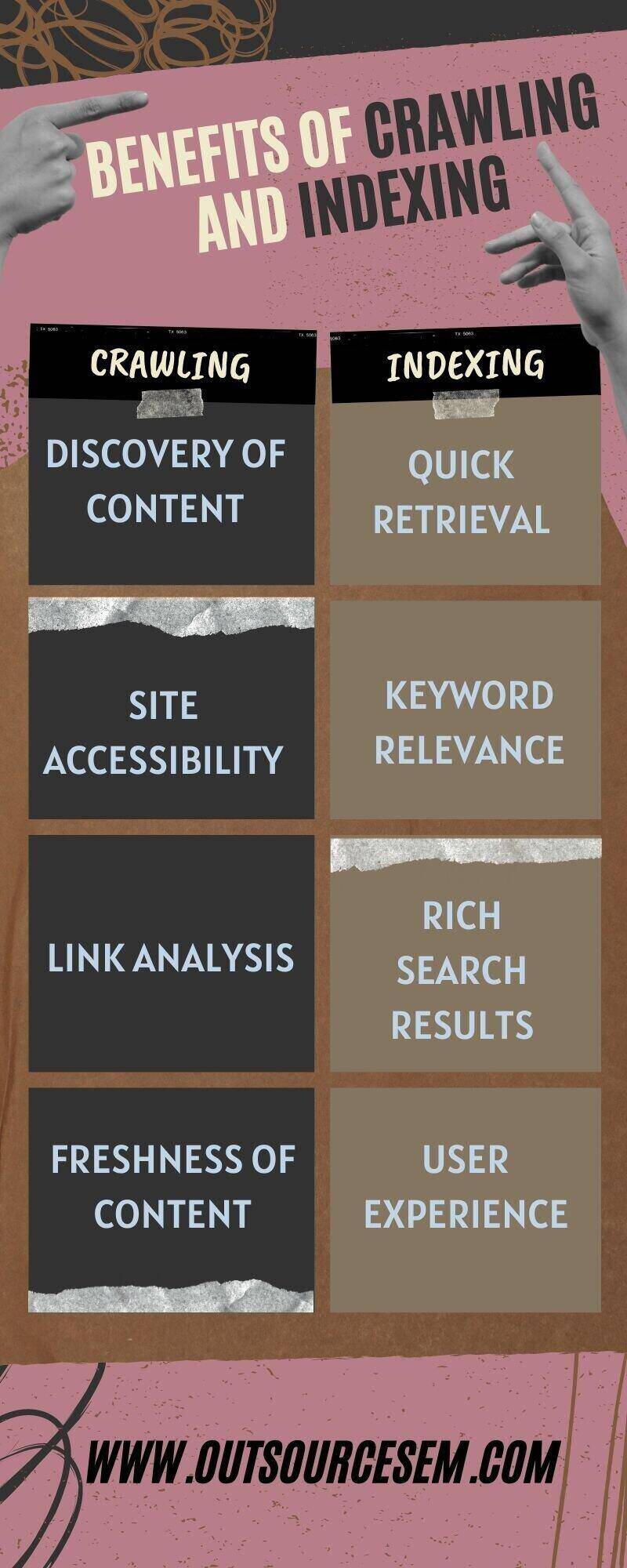

Benefits of web crawling in SEO

1. Discovery of content: Web crawling is how search engines find their way around the enormous internet environment. It makes sure that newly added and modified material is noticed. Without appropriate crawling, worthwhile blog posts, product pages, or fresh articles could remain in obscurity, missing out on chances to engage users and increase conversions. Search engine bots methodically crawl websites to spot new information and updates to existing pages. This ongoing process makes sure that the most recent data is taken into account for indexing, keeping websites current and relevant. To make your website accessible to crawlers, you can also outsource SEO and SEO analytics, which can in turn support your conversion rate optimization.

2. Site accessibility: Imagine a library with locked doors – no matter how valuable the books inside, they won’t benefit anyone if no one can access them. Effective crawling ensures that search engine bots can access and explore a website’s content unimpeded. Broken links, poorly configured robots.txt files, or server errors can act as virtual barriers, preventing bots from fully traversing a site. By addressing these accessibility issues, businesses open their doors wide, allowing search engine bots to explore thoroughly and index all valuable content. The result is improved chances of proper indexing, better rankings, and increased visibility in search results.

3. Link analysis: Web crawlers follow the intricate web of links connecting various pages on a website. This link analysis is akin to mapping the roads between cities – it helps search engines understand a site's structure and hierarchy. Thoughtful internal linking not only aids users in navigating a website but also guides search engine bots toward important pages. By creating a logical network of interconnected content, businesses ensure that vital pages receive the attention they deserve during the indexing process. Link building can significantly influence how these pages are ranked and related in search results.

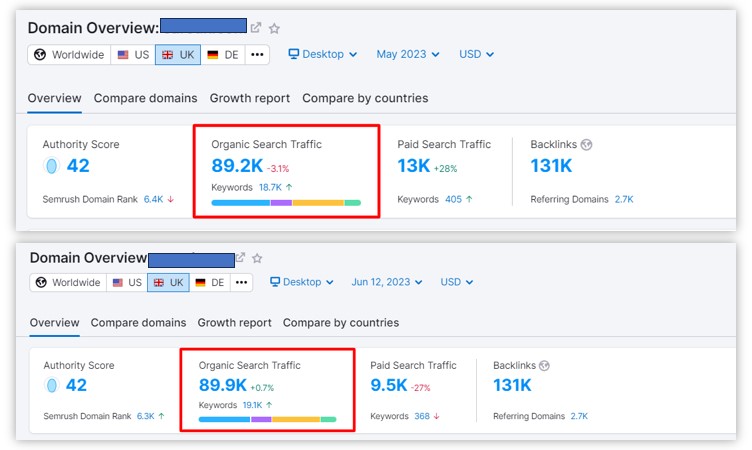

4. Freshness of content: Online material may go out of date and lose its relevance, just as a gourmet meal does when it is no longer fresh. Regular crawling helps search engines keep access to the most recent data. Websites that often add new material or update their old pages benefit from more frequent crawls, which in turn keep their content current in search engine indexes. Effective crawling is an essential tool because search engines place great value on freshness, and frequently updated material can help improve rankings and visibility in search results. For writing great content, you can outsource to a content marketing agency or get help from a content specialist.

Benefits of indexing in SEO

A. Quick retrieval: Imagine an extensive library without a catalog system; it would be difficult to locate a certain book. Search engines can quickly obtain pertinent information in response to user requests thanks to indexing, which organizes the data gathered by web crawling. Users obtain precise and speedy search results because of this rapid retrieval method. Businesses benefit from speedy retrieval since it increases the likelihood that their important material will be shown to users looking for similar information, improving the search experience overall.

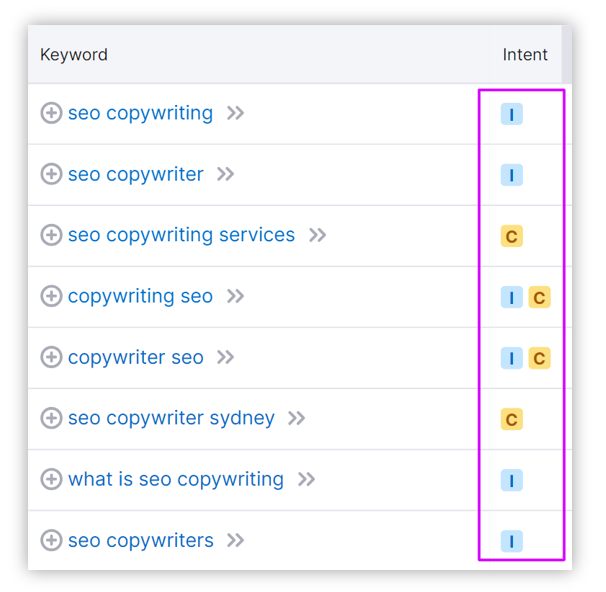

B. Keyword relevance: Keywords are the threads that tie content to user queries. Indexed content is associated with relevant keywords and phrases, enabling search engines to match user queries with the most suitable content. Integrating appropriate keywords in content ensures that it aligns with user intent and resonates with their search queries. A fully indexed, well-optimized piece of content has a greater chance of appearing prominently in search results, improving its visibility and drawing in more relevant visitors.

C. Rich search results: In the realm of search results, standing out from the crowd is key. Effective indexing can lead to the creation of rich search result features, such as featured snippets and knowledge panels. These features provide users with additional information directly in the search results, offering a glimpse into the content’s value. For businesses, achieving these rich features not only enhances visibility but also bolsters credibility. An indexed page that appears as a featured snippet becomes a showcase of expertise, attracting user attention and potentially driving higher click-through rates.

D. User experience: Imagine entering a store where everything is well-labeled and organized, making it simple for you to find what you're looking for. This feeling is replicated in the digital world by proper indexing. The user experience is enhanced by accurate indexing since users may get the information they need without having to filter through irrelevant results. Users are more likely to engage with the content, spend more time on a website, and perhaps perform desired actions like making a purchase or subscribing to a service when they can quickly find pertinent and reliable information. Proper indexing may create a great user experience that will boost conversions and improve user happiness.

By understanding and using the advantages of efficient web crawling and indexing, businesses can enhance their online exposure, increase user engagement, and improve conversion rates. These processes form the basis of a successful SEO plan, ensuring that material is not only present online but also discoverable, useful, and accessible to consumers across the online world.

Key aspects of web crawling in SEO

Enhanced site structure: A website’s structure serves as the foundation of effective web crawling. A well-organized architecture with a clear hierarchy of pages enhances the crawlability of a site. When the site content is categorized and interlinked, search engine bots can navigate it more efficiently. An intuitive navigation structure ensures that both users and bots can easily access important content. You can also outsource backlink analysis to complement this structural work. Additionally, a well-structured website makes it easier for search engines to recognize the relevance and context of each page. By adopting a clear hierarchy and employing user-friendly URLs, businesses can optimize their site’s crawlability and enhance their chances of being indexed accurately.

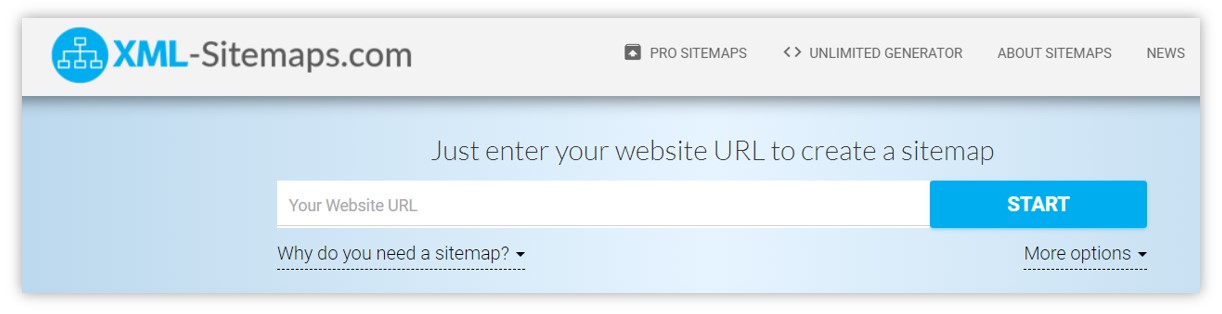

Sitemaps and SEO: XML sitemaps act as a crucial guide for search engine bots, enabling them to navigate a website’s landscape effectively. These sitemaps list the pages on a website that you want search engines to find, along with optional metadata that indicates when each page was last modified, how often it changes, and its relative importance.

Submitting XML sitemaps to search engines, for example through Google Search Console, with help from search engine optimization experts where needed, helps ensure that all important pages are discovered, crawled, and indexed. This proactive approach to guiding search engine bots through the site’s content improves the chances of important pages being discovered and indexed accurately. XML sitemaps are particularly beneficial for larger websites with intricate structures, helping ensure that no valuable content goes unnoticed by search engines.
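To make the format concrete, here is a minimal sketch that builds a sitemap with Python's standard library. The `build_sitemap` helper and the example entries are hypothetical; the element names (`urlset`, `url`, `loc`, `lastmod`, `changefreq`, `priority`) and the namespace come from the sitemaps.org protocol. Search engines may treat the optional fields as hints rather than instructions.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build a sitemap XML string from a list of dicts with a required 'loc' key."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = entry["loc"]
        # Optional metadata is written only when the entry provides it.
        for tag in ("lastmod", "changefreq", "priority"):
            if tag in entry:
                ET.SubElement(url, tag).text = entry[tag]
    return ET.tostring(urlset, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical pages; values are strings because they become XML text.
    print(build_sitemap([
        {"loc": "https://example.com/", "lastmod": "2024-01-15", "priority": "1.0"},
        {"loc": "https://example.com/blog", "changefreq": "weekly"},
    ]))
```

The resulting string can be saved as `sitemap.xml` at the site root and then submitted through the search engine's webmaster tools.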

Robots.txt for precision: The robots.txt file acts as a gatekeeper, instructing search engine bots on which parts of a website they are allowed to crawl and which parts they should avoid. This file is especially useful for keeping bots away from areas such as login pages, admin sections, or directories that offer no value in search. Keep in mind that robots.txt is publicly readable and only controls crawling: it is not a security mechanism, and a blocked URL can still end up indexed if other pages link to it. Genuinely private content needs authentication, and pages that must stay out of search results need a noindex directive on a page that bots are allowed to crawl. By fine-tuning the robots.txt file, website owners can control where crawlers spend their time. Steering bots away from duplicate, thin, or irrelevant pages helps search engines focus on the most valuable and relevant parts of a website. It's essential to strike a balance between keeping bots out of low-value or sensitive areas and ensuring that critical content remains accessible to them.
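As a quick illustration, the sketch below checks a sample robots.txt with Python's built-in `urllib.robotparser`. The rules in `ROBOTS_TXT`, the URLs, and the `make_parser` helper are made up for this example; a well-behaved crawler would run a similar check before fetching each URL.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: every bot is kept out of the admin and login areas.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /login
"""


def make_parser(robots_txt):
    """Parse robots.txt text directly instead of downloading it from a site."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    parser = make_parser(ROBOTS_TXT)
    for url in (
        "https://example.com/blog/post-1",
        "https://example.com/admin/users",
        "https://example.com/login",
    ):
        verdict = "allowed" if parser.can_fetch("Googlebot", url) else "blocked"
        print(url, "->", verdict)
```

Running a check like this against your own file before deploying it is a cheap way to catch a rule that accidentally blocks important pages.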

Incorporating these key aspects of web crawling optimization into a comprehensive SEO strategy can significantly enhance a website’s visibility in search results. A well-structured website, combined with effective utilization of sitemaps and a carefully configured robots.txt file, lays the groundwork for successful crawling and indexing, contributing to improved rankings and online presence.

Effective indexing techniques for SEO

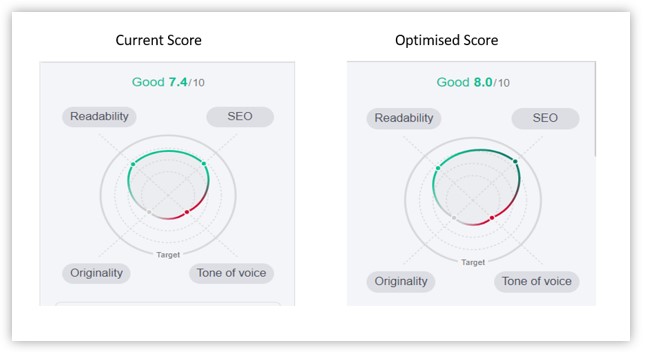

I. Keyword integration: Incorporating relevant keywords naturally within the content is a fundamental aspect of indexing optimization. Strategically placing keywords in a way that aligns with the content’s context helps search engines understand the topic and relevance of the page. However, it’s crucial to strike a balance: overloading content with keywords (keyword stuffing) can lead to penalties from search engines. Proper keyword integration ensures that the content is accurately indexed for the right queries, contributing to improved rankings.

II. Crafting metadata: Crafting compelling meta titles and descriptions is an art that goes beyond aesthetics. These snippets of information play a pivotal role in accurately indexing and presenting content in search results. A well-crafted meta title provides a concise and clear indication of the page’s topic, while the meta description provides a brief overview of the content. Engaging metadata not only enhances the likelihood of accurate indexing but also influences click-through rates, driving more organic traffic to the website.

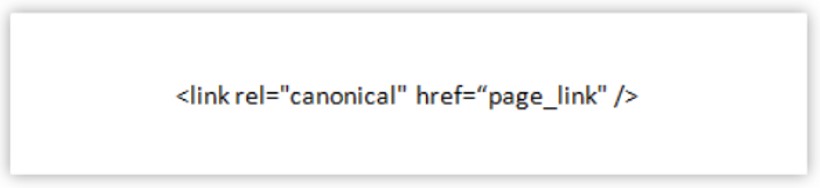

III. Canonical tags for duplication control: Duplicate content can pose challenges for accurate indexing. Canonical tags come to the rescue by indicating the preferred version of a page when multiple versions with similar content exist. This helps search engines such as Google understand which version should be indexed, preventing indexing confusion and keeping ranking signals from being split across duplicates. By employing canonical tags, website owners can ensure that the right version of their content is indexed, preserving the site's authority and relevance.
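A canonical tag is a `<link rel="canonical" href="...">` element in the page's `<head>`. The sketch below, which uses only Python's standard `html.parser`, shows how a tool might read it when auditing a page. The `CanonicalFinder` class, the `find_canonical` helper, and the sample HTML are hypothetical.

```python
from html.parser import HTMLParser

# Hypothetical page: a tracking-parameter URL pointing at its preferred version.
PAGE_HTML = """\
<html><head>
<title>Blue widget</title>
<link rel="canonical" href="https://example.com/products/widget">
</head><body><p>Product details</p></body></html>
"""


class CanonicalFinder(HTMLParser):
    """Remember the href of the first <link rel="canonical"> tag seen."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        # rel may hold several space-separated values, so compare word by word.
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attributes.get("href")


def find_canonical(html):
    """Return the canonical URL declared in the HTML, or None if there is none."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical


if __name__ == "__main__":
    print(find_canonical(PAGE_HTML))
```

Comparing this value with the URL that was actually requested is a simple way to spot pages that are missing a canonical tag or point to the wrong version.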

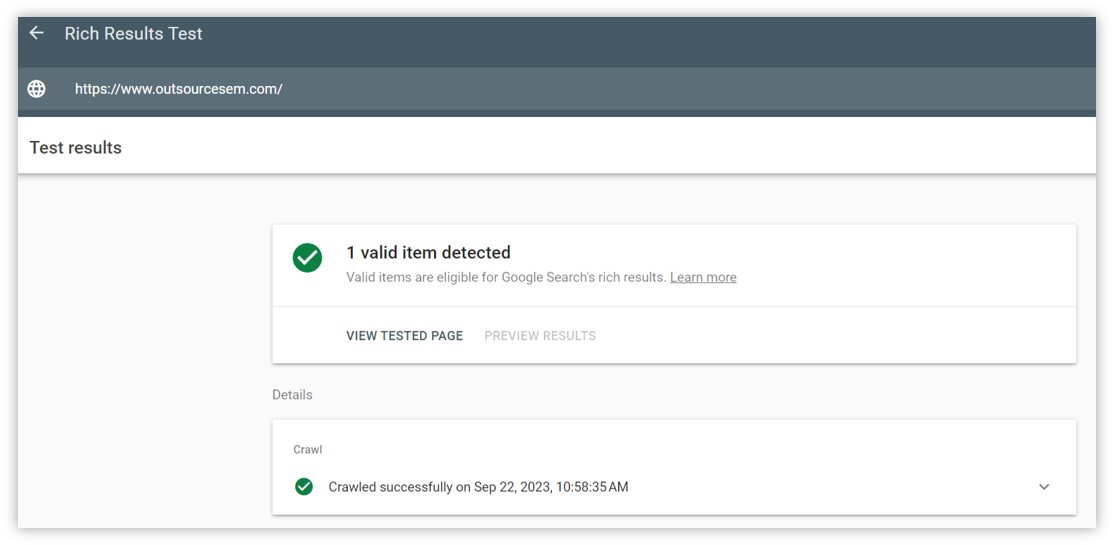

IV. Leveraging structured data: Structured data markup, often implemented using a vocabulary such as Schema.org, provides a way to offer additional context about content to search engines. This schema markup enhances the understanding of the content's purpose, making it easier for search engines to interpret and index the information accurately. Structured data also opens the door to rich search result features, such as rich snippets and knowledge panels, which enhance a website's visibility and credibility in search results.
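One widely used way to embed Schema.org markup is a JSON-LD script tag. The sketch below generates one for an article; the `article_json_ld` helper and the sample values are hypothetical, while `Article`, `headline`, `author`, `datePublished`, and `mainEntityOfPage` are Schema.org terms. Adding markup does not guarantee that a rich result will be shown.

```python
import json


def article_json_ld(headline, author_name, date_published, url):
    """Return a JSON-LD <script> tag describing an article with Schema.org terms."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
        "mainEntityOfPage": url,
    }
    # Assumes trusted input: values are not escaped for embedding in HTML.
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


if __name__ == "__main__":
    # Hypothetical article details.
    print(article_json_ld(
        "What is web crawling?",
        "Jane Doe",
        "2024-01-15",
        "https://example.com/blog/web-crawling",
    ))
```

The generated tag goes in the page's HTML, where search engines can read it alongside the visible content.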

V. Internal linking strategy: A well-thought-out internal linking strategy aids both user navigation and search engine indexing. Internal links guide search engine bots to essential pages within a website, ensuring that they are crawled and indexed appropriately. By establishing a web of interconnected content, internal linking enhances the overall crawlability and indexation efficiency of a website. Pages that are deeply buried within the site's structure can be easily discovered by search engine bots through these links, boosting their visibility in search results.
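To see which pages are "deeply buried", you can measure how many clicks each page is from the homepage and look for pages that no internal link reaches at all. This is a rough sketch over a hypothetical internal link map (`INTERNAL_LINKS`); the `click_depths` and `orphan_pages` helpers are illustrative names, not part of any SEO tool.

```python
from collections import deque

# Hypothetical internal link map: page -> pages it links to.
INTERNAL_LINKS = {
    "/": ["/blog", "/products"],
    "/blog": ["/blog/post-1"],
    "/products": [],
    "/blog/post-1": ["/products"],
    "/old-landing-page": ["/"],  # no other page links here
}


def click_depths(home, links):
    """Breadth-first search giving the minimum number of clicks from home."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(home, links):
    """Pages that exist in the map but cannot be reached by links from home."""
    reachable = click_depths(home, links)
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    return sorted(all_pages - set(reachable))


if __name__ == "__main__":
    print(click_depths("/", INTERNAL_LINKS))
    print(orphan_pages("/", INTERNAL_LINKS))
```

Pages with a large depth, or pages reported as orphans, are candidates for extra internal links from well-visited parts of the site.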

Incorporating these effective indexing techniques into an SEO strategy ensures that a website's content is not only accurately indexed but also presented optimally in search results. Precise indexing is the keystone that proves the worth of white label SEO services and assists customers in achieving their digital objectives. From proper keyword integration to structured data implementation, each technique contributes to elevating a website's online visibility, user engagement, and overall SEO performance.

Conclusion

The seamless operation of web crawling and indexing might often go unnoticed by users, but these two processes are the cornerstones of our online search experience. Together, they enable search engines to sift through the vast digital landscape, bringing relevant and timely information to our fingertips. The significance of efficient crawling and accurate indexing cannot be overstated.

SEO solutions provided by white label SEO companies help with client satisfaction and retention. In the world of PPC, the same principle is the driving force behind delivering relevant content to users and optimizing costs. With white label SEO services, you can leverage the power of crawling and indexing to transform your website's SEO performance. Using on-site SEO techniques, optimizing the website for local SEO, implementing proper indexing, and auditing with a web crawler tool all help enhance your website's ranking. For your PPC campaigns, you focus on proper PPC keyword research, campaign analysis, and so on to enhance performance. In the same way, you can use white label SEO services with SEO professionals who use the latest web crawler tools to enhance website ranking on SERPs and drive remarkable results. By hiring our digital marketers, you can also access services for other kinds of businesses, such as construction SEO, plumbing SEO, painting SEO, electrician SEO, appliance repair SEO, and much more. Through these services, the online visibility of your business can be increased globally.
