As the number of websites and web pages keeps growing, it gets harder for search engines to crawl and index them all. The web now has almost 2 billion websites, and the number of pages may run into the trillions, so it is fair to call the internet a sea of web pages. Crawling, indexing, and ranking are core functions of every search engine. But have you ever wondered whether Google, or any other search engine, crawls and caches every page on the internet? It does not. Google's resources are limited, so it cannot crawl every web page, and this is where the crawl budget comes into play.

The content on the internet is practically unlimited, but search engines have limited resources. That is why even a giant like Google cannot crawl every page on the internet, even though its parent company, Alphabet, reached a $2 trillion market capitalization within two years of becoming a $1 trillion company in January 2020. Many publishers do not know whether crawl errors and crawl budget affect how their pages rank, or whether they need to optimize for them. In this blog, we will explain crawl errors, crawl budget, and their related terms. We will then look at whether crawl budget and crawl errors affect SEO ranking, and finally show how to optimize your crawl budget according to Google's best practices.

What are crawl errors, crawl budget, and their related terms?

Before exploring the definitions of the crawl errors and crawl budget, let's have a brief overview of the crawling and indexing of a website.

What are crawling and indexing?

When a search engine bot (or crawler) accesses a website's publicly available web pages, this is called crawling. As the bot follows links from page to page, it downloads each page it finds and stores a copy on the search engine's servers. Indexing, in turn, is the process of analyzing, organizing, and storing the content of each page found during crawling.

Crawl errors - Crawl errors are the problems a search engine bot runs into while crawling a website, such as 4xx (client) errors or 5xx (server) errors. These errors prevent search engine spiders from fetching the affected pages, which leads to indexing issues and, ultimately, to those pages not ranking for the queries they target. A single error does not end the whole crawl, but frequent server errors can cause the crawler to slow down its crawling of the site.
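To show how 4xx and 5xx errors are told apart in practice, here is a minimal Python sketch that sorts HTTP status codes into buckets and lists the URLs that returned errors. It assumes you already have a mapping of URLs to status codes (for example, exported from a crawl tool); the function names and sample URLs are hypothetical.

```python
from collections import Counter


def classify_status(status: int) -> str:
    """Map an HTTP status code to a coarse crawl-outcome bucket."""
    if 200 <= status < 300:
        return "ok"
    if 300 <= status < 400:
        return "redirect"
    if 400 <= status < 500:
        return "client_error"  # 4xx, e.g. 404 Not Found, 410 Gone
    if 500 <= status < 600:
        return "server_error"  # 5xx, e.g. 500 Internal Server Error, 503
    return "unknown"


def summarize(results: dict[str, int]) -> Counter:
    """Count how many URLs fall into each bucket."""
    return Counter(classify_status(code) for code in results.values())


def crawl_errors(results: dict[str, int]) -> list[str]:
    """Return the URLs that answered with a 4xx or 5xx status, sorted."""
    return sorted(
        url
        for url, code in results.items()
        if classify_status(code) in ("client_error", "server_error")
    )


# Hypothetical status codes, e.g. exported from a site-crawl tool.
sample = {
    "https://example.com/": 200,
    "https://example.com/old-page": 404,
    "https://example.com/moved": 301,
    "https://example.com/report": 503,
}
print(crawl_errors(sample))
```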

Crawl budget - Crawl budget is the number of pages a search engine bot can and wants to crawl on a given website within a specified period. Google decides how much budget to allocate to each website, but factors such as the site's size, server setup, update frequency, and links affect it; we discuss these in the coming sections. Once the budget for a website is used up, Googlebot stops crawling that site for the period and moves on to other websites. Every website's budget is different, depending on its size, speed, relevance, update frequency, and so on.
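Google Search Console's Crawl Stats report shows how much Google actually crawls your site. As a rough cross-check, you can count Googlebot requests in your own server logs. The sketch below assumes log lines in the Apache/Nginx "combined" format, and the helper name and sample lines are hypothetical. It trusts the user-agent string alone, which can be spoofed; Google recommends verifying genuine Googlebot traffic with a reverse DNS lookup.

```python
import re
from collections import Counter

# Assumed Apache/Nginx "combined" log format.
LOG_RE = re.compile(
    r'^\S+ \S+ \S+ \[(?P<day>[^:]+):[^\]]+\] '  # client IP, ident, user, [date:time zone]
    r'"\S+ \S+ [^"]*" \d{3} \S+ '               # "METHOD /path PROTOCOL" status bytes
    r'"[^"]*" "(?P<agent>[^"]*)"'                # "referer" "user agent"
)


def googlebot_hits_per_day(lines: list[str]) -> Counter:
    """Count requests per day whose user agent claims to be Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_RE.match(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("day")] += 1
    return hits


BOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
# Hypothetical access-log lines.
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /products/shoes HTTP/1.1" 200 5123 "-" "{BOT}"',
    f'66.249.66.1 - - [10/Oct/2023:14:02:11 +0000] "GET /blog/post-1 HTTP/1.1" 404 312 "-" "{BOT}"',
    '203.0.113.7 - - [10/Oct/2023:14:05:00 +0000] "GET / HTTP/1.1" 200 8000 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    f'66.249.66.1 - - [11/Oct/2023:09:00:00 +0000] "GET / HTTP/1.1" 200 8000 "-" "{BOT}"',
]
print(googlebot_hits_per_day(SAMPLE_LOG))
```

The day keys are plain strings such as `10/Oct/2023`, so sort them with a date parser if you need chronological order.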

Crawl budget is determined by two main elements: the crawl rate limit and crawl demand.

• Crawl rate limit - The maximum rate at which a search engine bot fetches pages from your site, i.e. how many parallel connections it uses and how long it waits between fetches. The limit rises and falls with your site's responses: if the site responds quickly, the limit goes up and more connections can be used to crawl; if the site slows down or returns errors, the limit goes down.

• Crawl demand - How much the search engine wants to crawl your pages. Demand is usually high for popular pages, for pages that update frequently, and for new pages, so that the index stays fresh.
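The interplay of the two elements can be illustrated with a toy model. The Python sketch below is a hypothetical illustration, not Google's actual algorithm: it adds a parallel connection after fast responses, halves the connections after server errors or 429 (Too Many Requests) responses, and treats the amount actually crawled as the smaller of what the bot can fetch and what it wants to fetch. All thresholds are made-up values.

```python
from dataclasses import dataclass


@dataclass
class CrawlRateModel:
    """Toy model of a crawl rate limit. NOT Google's real algorithm."""

    connections: int = 2              # parallel fetches currently allowed
    min_connections: int = 1
    max_connections: int = 10
    fast_threshold_ms: float = 300.0  # hypothetical "healthy" response time

    def observe(self, response_ms: float, status: int) -> int:
        """Adjust the limit after one response and return the new value."""
        if status >= 500 or status == 429:
            # Server errors or rate limiting: back off sharply by halving.
            self.connections = max(self.min_connections, self.connections // 2)
        elif response_ms <= self.fast_threshold_ms:
            # Fast, healthy response: probe upward by one connection.
            self.connections = min(self.max_connections, self.connections + 1)
        elif response_ms > 2 * self.fast_threshold_ms:
            # Very slow response: ease off by one connection.
            self.connections = max(self.min_connections, self.connections - 1)
        # Moderately slow responses leave the limit unchanged.
        return self.connections


def effective_budget(capacity_urls: int, demand_urls: int) -> int:
    """URLs actually crawled: capped by what the bot can AND wants to fetch."""
    return min(capacity_urls, demand_urls)


demo = CrawlRateModel()
for ms, status in [(120, 200), (150, 200), (900, 503), (700, 200)]:
    demo.observe(ms, status)
print(demo.connections)
```

The point of the sketch is the shape of the behavior: a site that stays fast and error-free earns more crawl capacity, but capacity only matters up to the level of demand.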

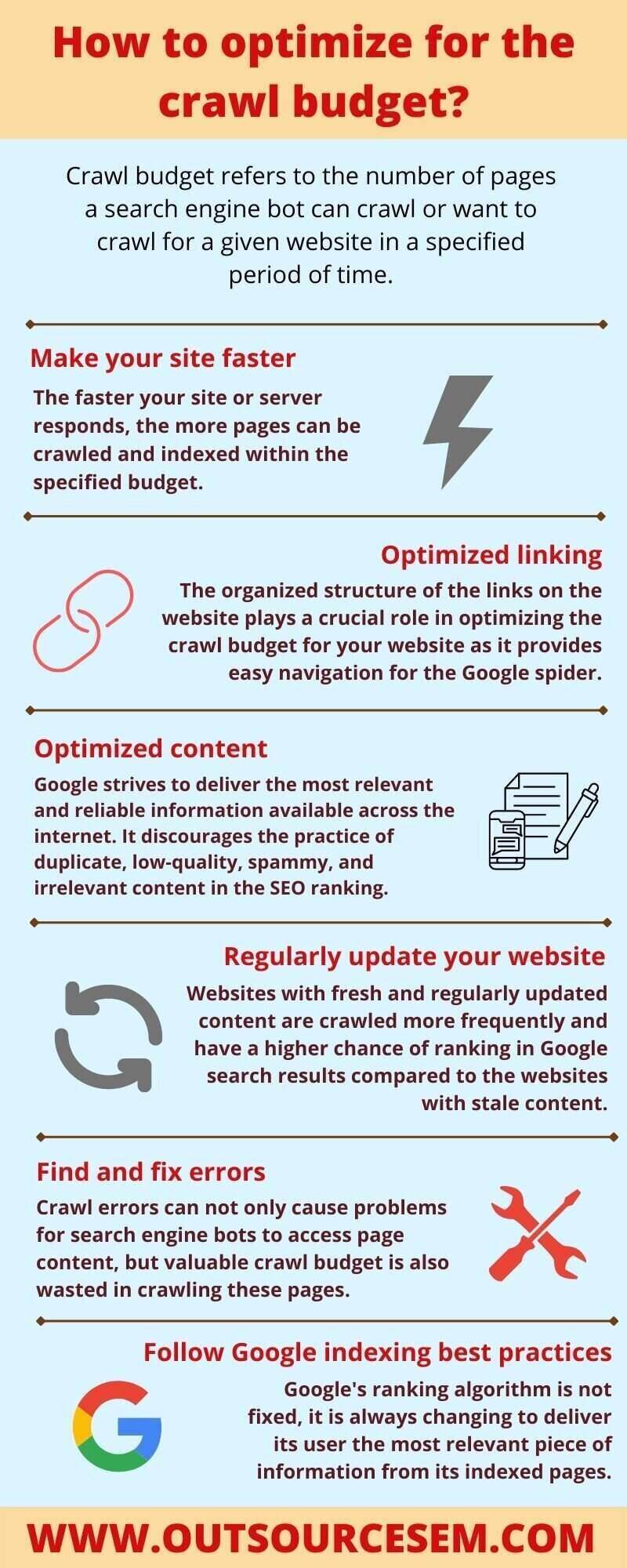

How to optimize for the crawl budget?

1. Make your site faster - There is no upper limit on how fast your site can respond, and speed pays off directly. The faster your site or server responds, the more pages can be crawled and indexed within the same budget; on a slow site, there is a good chance the budget is exhausted while some pages are still uncrawled. A fast site is not only a crawl budget optimization; it also improves the user experience. Keep an eye on how your budget is spent so that it is not wasted on slow responses. Every improvement helps, so speeding up your website is a sensible first step toward crawl budget optimization.
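A back-of-the-envelope calculation shows why response time matters. The sketch assumes a deliberately simplified, hypothetical model in which each crawler connection fetches pages back to back with no pauses; real crawlers fetch far fewer pages than this upper bound, so the ratio between the two results is what matters, not the absolute numbers.

```python
def max_fetches(window_seconds: int, avg_response_ms: int, connections: int) -> int:
    """Rough upper bound on fetches in a time window (simplified, hypothetical model).

    Assumes each connection fetches pages back to back, with no politeness
    delay, network overhead, or crawl-demand limit. Integer math avoids
    floating-point rounding.
    """
    if avg_response_ms <= 0 or connections <= 0:
        raise ValueError("response time and connections must be positive")
    fetches_per_connection = (window_seconds * 1000) // avg_response_ms
    return fetches_per_connection * connections


ONE_DAY = 24 * 60 * 60  # 86,400 seconds
print(max_fetches(ONE_DAY, avg_response_ms=1000, connections=2))  # 172800
print(max_fetches(ONE_DAY, avg_response_ms=200, connections=2))   # 864000
```

Under this model, cutting the average response time from 1,000 ms to 200 ms raises the theoretical ceiling fivefold.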

2. Optimized linking - A well-organized link structure plays a crucial role in optimizing your crawl budget because it gives Googlebot easy paths through your site. This applies to both internal and external linking. As mentioned above, Googlebot follows links from page to page; if a page has no links, or its links are disorganized, crawling and indexing the website becomes harder. It is therefore highly recommended to maintain a clear link structure that lets crawlers find pages easily and lets human users navigate conveniently. You can also outsource a backlink analysis service to find the backlinks pointing to your website.
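One way to check your link structure is to treat the site as a graph and run a breadth-first search from the home page. This shows how many clicks each page is from the home page and exposes "orphan" pages that no internal link reaches. The link graph, sitemap list, and function names below are hypothetical; in practice you would build the graph from a site-crawl export.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search from the home page.

    Returns the minimum number of clicks needed to reach each linked page.
    """
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS = shortest click path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def orphan_pages(links: dict[str, list[str]], known: list[str], home: str) -> list[str]:
    """Pages you know about (e.g. from a sitemap) that no internal link reaches."""
    reachable = set(click_depths(links, home))
    return sorted(set(known) - reachable)


# Hypothetical internal-link graph: page -> pages it links to.
site_links = {
    "/": ["/blog", "/shop"],
    "/blog": ["/blog/post-1"],
    "/shop": ["/shop/shoes"],
}
sitemap_urls = ["/", "/blog", "/blog/post-1", "/shop", "/shop/shoes", "/old-landing"]
print(click_depths(site_links, "/"))
print(orphan_pages(site_links, sitemap_urls, "/"))  # ['/old-landing']
```

Pages that sit many clicks deep, or that are orphaned entirely, are the ones a crawler is most likely to reach late or not at all.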

3. Optimized content - Google focuses on delivering the most relevant and reliable information available on the internet. Through crawling and indexing, it collects information about each web page, shortlists the pages most relevant to a search query, and delivers results from those indexed pages. Given this, publishers should quickly see that there is no place for duplicate, low-quality, spammy, or irrelevant content in search engine optimization (SEO). This is one of many reasons that only a few websites rank on SERPs for a specific keyword.

Today, search engines, social media platforms, and almost every other digital platform are fighting fake content, which can severely degrade the user experience (UX). Google's algorithms are therefore strict toward fake, duplicate, and spammy content, and such content can also affect your crawl budget: if Googlebot finds it while crawling your website, it may crawl your site less the next time, because Google does not want to spend its resources crawling content that is not helpful to users. Avoid these content issues and optimize your content according to Google's SEO guidelines.
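Exact duplicates are the easiest content problem to detect automatically. The minimal sketch below (hypothetical page data and helper names) normalizes each page's text, hashes it with SHA-256, and groups URLs whose text is identical after normalization. It will not catch near-duplicates; those usually call for techniques such as shingling or SimHash.

```python
import hashlib
import re
from collections import defaultdict


def normalize(text: str) -> str:
    """Lowercase and collapse runs of whitespace so trivial differences are ignored."""
    return re.sub(r"\s+", " ", text).strip().lower()


def fingerprint(text: str) -> str:
    """SHA-256 hash of the normalized text."""
    return hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()


def duplicate_groups(pages: dict[str, str]) -> list[list[str]]:
    """Group URLs whose normalized text is identical; only groups of 2+ are returned."""
    groups = defaultdict(list)
    for url, body in pages.items():
        groups[fingerprint(body)].append(url)
    return sorted(sorted(urls) for urls in groups.values() if len(urls) > 1)


# Hypothetical extracted page text, keyed by URL.
pages = {
    "/red-shoes": "Red Shoes  for sale",
    "/red-shoes?ref=home": "red shoes for sale",
    "/blue-hats": "Blue hats in stock",
}
print(duplicate_groups(pages))
```

Duplicates caused by tracking parameters, as in this example, are typically resolved with a canonical URL or by linking only to the clean version.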

4. Regularly update your website - It is no secret that fresh, updated content is a Google ranking factor: pages that are crawled more frequently have a better chance of ranking in Google search results than stale ones. So when optimizing for crawl budget, update frequency cannot be overlooked. After every major change to your website, let Google know that the site has been updated, for example through XML sitemaps and structured data. Submitting your sitemap in Google Search Console helps Google discover the updated pages, although it does not guarantee that they will be indexed. Likewise, update your sitemap every time you add a new page and resubmit it to Google so that bots can easily crawl and index it.
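An XML sitemap follows the sitemaps.org protocol: a `<urlset>` element containing one `<url>` entry per page, each with a `<loc>` and an optional `<lastmod>` date. The sketch below generates one with Python's standard library. The page list and function name are hypothetical, and it emits only `loc` and `lastmod`; keep the dates accurate so they stay meaningful.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: dict[str, date]) -> str:
    """Return sitemap XML for {absolute_url: last_modified_date}."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in sorted(pages.items()):
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()  # W3C date, e.g. 2024-05-01
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages and modification dates.
xml_text = build_sitemap({
    "https://www.example.com/blog/post-1": date(2024, 5, 1),
    "https://www.example.com/": date(2024, 5, 3),
})
print(xml_text)
```

Write the returned string to a UTF-8 file such as `sitemap.xml`, upload it to your site, and submit its URL in Google Search Console.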

5. Find and fix errors - Crawl errors do not only stop search engine bots from accessing a page's content; they also waste valuable crawl budget on those pages, and Google may reduce your website's budget the next time. It is your job to keep your website as error-free as possible, for both Google indexing and human users. The most common problems are 4xx and 5xx errors, which any SEO tool can help you find and fix. Other issues that drain your budget include incorrect redirects, broken pages (404 errors), soft 404 pages, hacked pages, and redirect chains (where an initial URL is redirected several times before reaching its destination). Fixing these issues can significantly improve how your crawl budget is spent.
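Redirect chains and loops can be found mechanically once you have a list of redirects (source URL to target URL), for example from your server configuration or a crawl export. In this hypothetical sketch, any path with more than one hop is reported as a chain, and a path that revisits a URL is reported as a loop.

```python
def redirect_path(redirects: dict[str, str], start: str) -> tuple[list[str], bool]:
    """Follow redirects from `start`; return the URLs visited and whether it loops."""
    path = [start]
    while path[-1] in redirects:
        target = redirects[path[-1]]
        if target in path:  # revisiting a URL means a redirect loop
            return path + [target], True
        path.append(target)
    return path, False


def redirect_problems(redirects: dict[str, str]) -> dict[str, tuple[str, list[str]]]:
    """Report every source URL that starts a loop or a chain of 2+ hops."""
    report = {}
    for source in sorted(redirects):
        path, is_loop = redirect_path(redirects, source)
        if is_loop:
            report[source] = ("loop", path)
        elif len(path) - 1 > 1:  # hops = URLs visited minus the start
            report[source] = ("chain", path)
    return report


# Hypothetical redirect map: source URL -> target URL.
redirects = {
    "/a": "/b",
    "/b": "/c",
    "/old": "/new",
    "/x": "/y",
    "/y": "/x",
}
for source, (kind, path) in redirect_problems(redirects).items():
    print(kind, " -> ".join(path))
```

Here `/a` should point straight to `/c`, and the loop between `/x` and `/y` needs to be broken.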

For large websites with millions of pages, finding and fixing every error is challenging, and tools like Google Search Console (GSC) help a great deal here. A few errors will not have much effect and may simply be ignored by the crawler. If errors are a recurring problem, however, fix them as far as possible, because once your crawl budget is exhausted, Googlebot moves on to another website and may leave your important pages uncrawled. To tackle this, use robots.txt to block low-value URLs so that crawling is focused on your authoritative pages. Keep in mind that robots.txt controls crawling, not indexing.
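Before deploying robots.txt changes, it is worth checking which URLs they actually block. The sketch uses Python's standard `urllib.robotparser` module with a hypothetical robots.txt that blocks internal search and cart URLs. This parser applies rules by simple prefix matching in file order and does not support the `*` and `$` wildcards that Googlebot understands, so treat it as a sanity check rather than an exact model of Google's behavior.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep crawlers out of low-value search and cart URLs.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search/
Disallow: /cart/
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def blocked_urls(parser: RobotFileParser, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given user agent is not allowed to crawl."""
    return [url for url in urls if not parser.can_fetch(agent, url)]


rules = load_rules(ROBOTS_TXT)
print(blocked_urls(rules, [
    "https://www.example.com/products/shoes",
    "https://www.example.com/search/?q=red+shoes",
    "https://www.example.com/cart/checkout",
]))
```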

6. Follow Google indexing best practices - Google's ranking algorithm is not fixed; it keeps changing to deliver users the most relevant information from its index. It analyzes and ranks websites using many data points, such as search history, location, and search behavior. Crawling and indexing are complicated and expensive for search engines, and they get harder as the number of pages keeps growing. Because crawling consumes a large share of Google's resources, its indexing and ranking are in a transition toward prediction models: with the help of AI and machine learning, users get more personalized results that better match their queries. In other words, Google is investing in a future of crawling and indexing that may look quite different from today's.

Personalization increasingly shapes the results each user sees. Since 1 July 2019, mobile-first indexing has been enabled by default for new websites, meaning Google primarily uses the mobile version of a page for indexing and ranking, so websites optimized for mobile devices are at an advantage. Some search engines also offer indexing APIs that let you notify them as soon as your website is updated, for faster discovery, crawling, and indexing of your content. Google's own Indexing API is officially limited to pages with job posting or livestream (BroadcastEvent) structured data, while Bing and Yandex support the IndexNow protocol.
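As an example of such an API, the IndexNow protocol lets a site POST a JSON body with `host`, `key`, `keyLocation`, and `urlList` fields. The sketch below only builds the request and does not send it. The key, key-file URL, and page URLs are hypothetical; confirm the endpoint and field names against the current IndexNow documentation before relying on them.

```python
import json
from urllib.parse import urlparse
from urllib.request import Request

# Endpoint as published by the IndexNow project; verify before use.
INDEXNOW_ENDPOINT = "https://api.indexnow.org/IndexNow"


def build_indexnow_request(urls: list[str], key: str, key_location: str) -> Request:
    """Build (but do not send) an IndexNow POST request for URLs on a single host."""
    hosts = {urlparse(url).netloc for url in urls}
    if len(hosts) != 1:
        raise ValueError("submit URLs from exactly one host per request")
    payload = {
        "host": hosts.pop(),
        "key": key,
        "keyLocation": key_location,
        "urlList": list(urls),
    }
    return Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


# Hypothetical key and URLs; the key file must really be hosted at key_location.
req = build_indexnow_request(
    ["https://www.example.com/new-post", "https://www.example.com/updated-page"],
    key="abc123",
    key_location="https://www.example.com/abc123.txt",
)
```

Sending it would be a matter of calling `urllib.request.urlopen(req)`; the search engine then fetches the key file to confirm that you control the host.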

Crawl errors and crawl budget: do you need to worry?

Errors of any kind are bad for a website, and crawl errors are no exception, because they stop the bot from crawling the affected pages. A page that is not crawled is not indexed, so it cannot rank. Errors that crawlers encounter may also affect your budget, as Google may crawl your site less the next time.

Now let's see whether crawl budget affects Google indexing and ranking. Google has stated on its blog that crawling is not a ranking signal. Being crawled is necessary to appear in the results, but it is not directly tied to SEO ranking on SERPs, which depends on hundreds of other ranking factors.

Small websites with relatively few pages (up to a few thousand URLs) do not need to worry about crawl budget, as they will be crawled efficiently. Medium-sized websites with many unique pages (roughly 10,000 or more) whose content changes daily or more often should optimize for crawl budget; this typically applies to news and informational websites. Large websites with millions of unique pages whose content is updated at a moderate rate (for example, once a week or a few times a month) also need a crawl budget strategy so that their pages make it into Google's index.
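The size bands above can be summarized as a quick triage function. This is a hypothetical helper: the cut-offs (1,000,000 pages updated at least twice a month; 10,000 pages updated roughly daily) are our reading of the text, not official limits.

```python
def should_plan_crawl_budget(unique_pages: int, updates_per_month: float) -> bool:
    """Rough triage based on the size and update-frequency bands described above.

    The cut-offs are an interpretation of the text, not official Google limits.
    """
    # Large site with moderately changing content (a few times a month or more).
    large_and_moderate = unique_pages >= 1_000_000 and updates_per_month >= 2
    # Medium site whose content changes about daily (~30 times a month) or faster.
    medium_and_fast = unique_pages >= 10_000 and updates_per_month >= 30
    return large_and_moderate or medium_and_fast


print(should_plan_crawl_budget(2_000, updates_per_month=60))      # small site
print(should_plan_crawl_budget(50_000, updates_per_month=30))     # daily news site
print(should_plan_crawl_budget(3_000_000, updates_per_month=4))   # large catalog
```

Sites for which the function returns `False` are better served by the SEO fundamentals discussed earlier than by crawl budget work.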

Round off

Crawling and indexing are processes search engines run to organize the information on the internet and deliver it to users in the most useful way; publishers do not control them directly. Crawl budget is not something most publishers need to worry about: they should stick to the fundamentals of SEO and optimize for Google's other ranking factors. They should also work on avoiding crawl errors, since a large number of low-value URLs hurts crawlability and can cause problems for ranking indexed pages. Websites with thousands or millions of pages that update frequently, however, should focus on optimizing their crawl budget.

As an SEO service provider, we can help you scale up your business with cost-efficient, best-in-class SEO services delivered by a team of SEO experts, including SEO audits, SEO analytics, detox and penalty removal, link building, local SEO, digital analytics, keyword research, website conversion analysis, and much more. Our SEO team here at OutsourceSEM has a deep understanding of many industries and provides specialized SEO services for electricians, flooring, roofing, lawyers, small businesses, and more, to increase your website traffic and conversions.
